The Big Condensation

Disclaimer: I’ve spent my career working for firms too small to afford “proper” enterprise kit. I’ve never bought a network connection wider than a 100Mbps drop.

The cost of silicon has collapsed; power usage is under control; bandwidth isn’t an issue if you’re sensible. “Enterprise” is a misnomer; most of it is barely distinguished from what’s on sale at Dixons. Within a few years, IT in well-run companies will focus almost solely on improving the use of people. CTOs of the future will spend their lives trying to cut phone support and replacement call-out costs.

Presumptions that businesses need ever higher capital expenditure on processing power are now demonstrably false. Developments in technology have far outstripped the true needs of organisations. Server capacity is doubling every two years; the data any business needs to process is not. Given that creating a business data point usually requires a real-world event, it is not physically possible for general business needs to keep up with technology.
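To put rough numbers on that gap, here is a minimal sketch of the compounding. The two-year doubling comes from the paragraph above; the 10% annual growth in business data is purely my assumption for illustration, so plug in your own figure.

```python
# Illustrative compounding only. The 10% annual data growth rate is an
# assumption made for this sketch, not a figure from the article.

def capacity_multiple(years, doubling_period_years=2.0):
    """How many times larger server capacity is after `years`."""
    return 2.0 ** (years / doubling_period_years)


def data_multiple(years, annual_growth=0.10):
    """How many times larger a business's data is after `years` (assumed rate)."""
    return (1.0 + annual_growth) ** years


if __name__ == "__main__":
    for years in (2, 6, 10):
        cap = capacity_multiple(years)
        data = data_multiple(years)
        print(f"{years:>2} years: capacity x{cap:.1f}, data x{data:.2f}, "
              f"headroom x{cap / data:.1f}")
```

Even with a much more generous growth rate for the data, the capacity curve pulls away within a few years.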

Hardware is CHEAP

An 8-core, 48GB RAM, 48TB storage server costs about £10,000 from SuperMicro [an OEM server maker]. To put that into perspective, it would take 26TB to store 10 scanned A4 pages + 100 pages of typed notes on every household in Britain. Some 40GB of that RAM could hold half a page of text for each household for instantaneous retrieval. A major bank, or an airline, could not live on that one machine, but the entire UK VAT system wouldn’t strain it too much. Modern mass market kit is too cheap to matter, and you don’t need much of it to get a lot done.
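The household arithmetic can be checked on the back of an envelope. In this sketch the household count and the per-page sizes are my assumptions, chosen as plausible round numbers; only the page counts and the 48GB/48TB server spec come from the text.

```python
# Back-of-envelope check. Every constant below is an assumption made for
# illustration, not a figure stated in the article.
HOUSEHOLDS = 26_000_000        # assumed rough number of households in Britain
SCANNED_PAGE_BYTES = 70_000    # assumed size of one compressed scanned A4 page
TYPED_PAGE_BYTES = 3_000       # assumed size of one page of plain text


def bytes_per_household(scanned_pages=10, typed_pages=100):
    """Storage for one household's scans plus typed notes."""
    return scanned_pages * SCANNED_PAGE_BYTES + typed_pages * TYPED_PAGE_BYTES


def total_terabytes(households=HOUSEHOLDS):
    """Total storage for every household, in decimal terabytes."""
    return households * bytes_per_household() / 1e12


def ram_bytes_per_household(ram_gb, households=HOUSEHOLDS):
    """How many bytes of RAM each household gets if the RAM is shared evenly."""
    return ram_gb * 1e9 / households


if __name__ == "__main__":
    print(f"Disk needed: {total_terabytes():.0f} TB of the server's 48 TB")
    print(f"RAM per household: {ram_bytes_per_household(48):.0f} bytes")
```

Under these assumptions each household gets a little under 2KB of RAM, which is in the right region for half a page of text.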

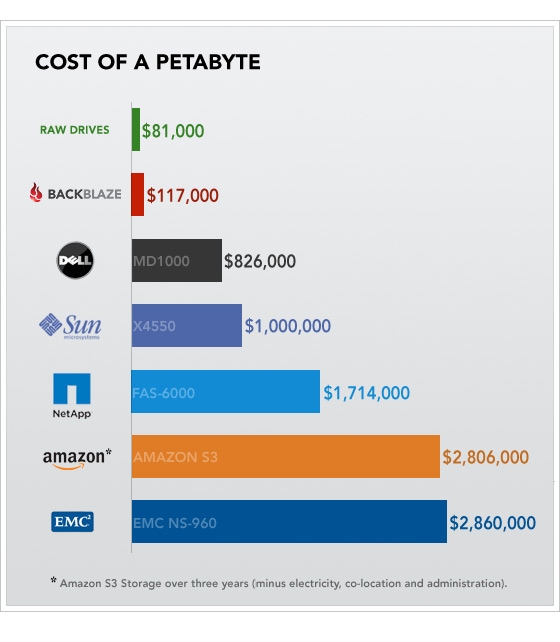

You might say “Ah, but soon we will have video phones, we will need Petabytes!”. And you’d be right, many businesses will soon require many petabytes of storage – each of which costs about $120,000 in raw hardware if you strip out the vendor mark-up.

BackBlaze Cost Estimates

(Assuming all a datacentre needs to do is store that petabyte. If it’s not “hot” storage, it doesn’t need fast disks, RAID, etc.)

The Cloud concept is a distraction. If you can condense a FTSE 100 company’s systems down to a few racks of hardware, it doesn’t really matter who owns it, or if it’s at 32% or 78% capacity. The costs won’t be worth optimising. People won’t develop elaborate systems to migrate data across continents for cheaper processing. They’ll spend that time trying to cut down on support calls for broken printers.

Every 18-year-old World of Warcraft addict needs better hardware than your global billing system

The real insatiable demand for computer power today is from consumers: computer games, watching high-def online video, running development tools, storing 200+ torrented movies, etc. All of this eats up ever increasing amounts of rapidly improving hardware. Business IT could never generate the demand for silicon that the games console market has. There’s more money in making FarCry prettier, and it’s a more challenging target for manufacturers to aim for.

Post-90s enterprise kit has been a small tributary off a river of high-powered consumer gadgetry. A $110k SAN from BigVendorInc is a modified laptop motherboard, a dozen small hard drives, a power supply and a plastic box. (Oh, and a sticker.) The manufacturers of those parts will sell a hundred times their SAN volume into the consumer market.

Consumers are more demanding than business and they now drive R&D.

- Intel’s power efficient Atom chips are derived from designs to make notebook battery life longer. Atom servers are in the works, but the netbook market is bigger and that’s the industry’s priority.

- The Cell Processor was developed for the PlayStation 3. It is now finding uses in weather modelling and other scientific fields.

Server hardware might be better quality and sold in larger packages, but the basic internal components are from the same production lines and basic designs.

Brief aside on bandwidth costs

Bandwidth prices still depend on route, speed and distance. Geography stops bandwidth costs from falling uniformly the way hardware can.

If we take some typical monthly prices for dedicated circuits between datacentres in the UK…

- London OR Manchester Metro Areas 1Gbps circuit: £200

- London TO Manchester Metro Areas 1Gbps circuit: £3100

(A 1Gbps circuit can download a 3 hour long DVD in 48 seconds.)
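The 48 second figure is easy to reproduce if you assume the DVD is about 6GB, which is my inference from the number rather than something stated above. A minimal sketch, ignoring protocol overhead and using decimal units throughout:

```python
# Ideal transfer time over a dedicated circuit. The 6 GB DVD size is an
# assumption inferred from the 48 second figure; overhead is ignored.

def transfer_seconds(size_gigabytes, link_gbps):
    """Seconds to move `size_gigabytes` over a link of `link_gbps` gigabits/s."""
    if link_gbps <= 0:
        raise ValueError("link speed must be positive")
    gigabits = size_gigabytes * 8  # 8 bits per byte
    return gigabits / link_gbps


if __name__ == "__main__":
    dvd_gb = 6.0
    print(f"1Gbps:   {transfer_seconds(dvd_gb, 1.0):.0f} s")
    print(f"100Mbps: {transfer_seconds(dvd_gb, 0.1):.0f} s")
```

The same disc takes about eight minutes over the 100Mbps drop mentioned in the disclaimer.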

Or over a wider area…

And these are the prices that matter. Home internet connections past the local exchange are just agglomerations of wholesale circuits.

Bandwidth within the developed world is cheap, but not limitless. Connectivity in the developing world (where most global growth is happening) is going to be very expensive for a while yet. Even if metro area pricing has reached the point where desktops can be run centrally, any globalised organisation will have to respect network geography and costs for decades to come.

Power consumption is a legacy issue

Power consumption is currently a worry. The commodity shock last year made clear how utterly we have failed to deal with energy efficiency as an industry. Fortunately, there is a great deal of low hanging fruit. The data centres thrown together in the boom times were awful. We can easily do better.

Individual server inefficiency (and waste heat) is mostly a problem of too many, too-small servers, not of the workload on those servers.

Typical Power Consumption over Workload

An almost idle server will burn 2/3rds of the power of a fully loaded one. If you look at this chart it’s easy to see why.

Power by Component

It isn’t CPU cycles that eat that power. Most of it goes to the surrounding circuitry. High power costs are a tax on poor datacentre consolidation. If you put an internal blog site on a six machine cluster, you’re primarily installing a storage heater. Almost none of the power will be consumed usefully. But until recently this sort of arrangement was common in corporate IT. Best practice meant buying a server for each tier of each application, then duplicating and clustering each of those.
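The storage heater point can be put in rough numbers. This sketch takes the 2/3rds idle figure from above and assumes power rises linearly with load; the 300W full-load draw and the 5% utilisation are hypothetical values picked for illustration.

```python
# Rough consolidation comparison. The idle fraction comes from the text;
# the wattage, the utilisation and the linear model are assumptions.
IDLE_FRACTION = 2.0 / 3.0    # idle server draws about 2/3 of full-load power
FULL_LOAD_WATTS = 300.0      # hypothetical full-load draw of one server


def server_watts(utilisation):
    """Assumed linear model: fixed idle draw plus a load-proportional part."""
    if not 0.0 <= utilisation <= 1.0:
        raise ValueError("utilisation must be between 0 and 1")
    return FULL_LOAD_WATTS * (IDLE_FRACTION + (1.0 - IDLE_FRACTION) * utilisation)


def cluster_watts(server_count, utilisation_each):
    """Total draw of `server_count` identical servers at the same utilisation."""
    return server_count * server_watts(utilisation_each)


if __name__ == "__main__":
    spread_out = cluster_watts(6, 0.05)    # six boxes, each almost idle
    consolidated = cluster_watts(1, 0.30)  # the same total work on one box
    print(f"Six servers at 5%:  {spread_out:.0f} W")
    print(f"One server at 30%:  {consolidated:.0f} W")
```

Under these assumptions the six machine cluster draws more than five times the power for the same work, and nearly all of the difference is idle overhead.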

Stuffing thousands of pizza boxes into a datacentre, each with their own fans, motherboards, disk controllers and PSUs, is very inefficient. You are basically sealing thousands of small storage heaters into a large brick box and recirculating warm air over them.

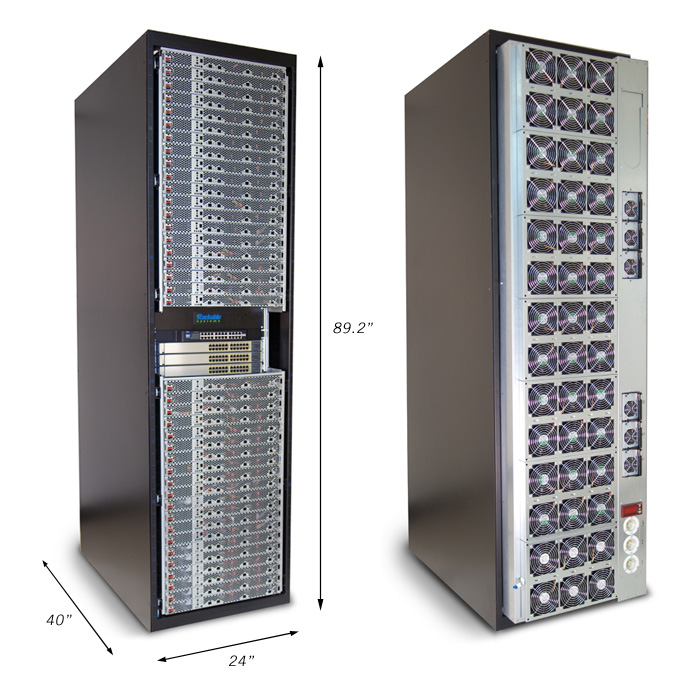

Energy use is falling rapidly for new servers. Go to any supplier’s website and try not to see hardware being sold on power efficiency. New server manufacturers are pushing things further, developing high-density datacentre hardware with shared power supplies and cooling. SGI can sell you a single rack with over 200 low power “servers” for around £180k.

A CloudRack

A lot of smart people are working on reducing data centre cooling costs. It’s being dealt with. Simple steps like separating warm/cold airflows, opening up datacentres to outside air, and planning cooling requirements realistically can help significantly. The current mess is a hangover from the boom years, not a foreshadowing of crippling energy costs to come.

The only doomed option is “can’t”

Huge cost differences open up around the ability to migrate across hardware. Those who can press a button to install their systems on new boxes can migrate through the ever more efficient hardware created for the consumer market. Organisations that can’t will accumulate legacy hardware systems whose cost of ownership will creep up over time.

“Once authorised for processing, the batch processing nature of the VAT mainframe means that it takes a minimum of six days to process a voluntary disclosure (there are overnight updates for three separate forms).” – HMRC Meeting Minutes (2008)

The ideal mix of outsourced applications, cloud computing, smart pipes, co-located servers, etc will vary by business model, scale, technical skills, and so on – but the key differentiator will be the ability to choose that mix.

If you can copy your entire IT system to USB drives, FedEx it somewhere and re-install it, you have options. You can put your bulk processing in the cloud, your database on AS/400s, your web servers on Dells, and if something else becomes better, you can switch. Perhaps not for free, but for a controllable price.

Motivational Examples

Let’s look at some firms that are small and have no legacy infrastructure.

- Skype can handle 1000-2000 database queries a second on 16 servers.

- PlentyOfFish claim 30m page views on 5 (very big) commodity servers.

- Facebook has 200m users, and is burning between $60-200m a year in servers. Even if we assume half the users are inactive, that’s at most $2/year per user for an application most of those users use constantly.

- Stack Overflow are managing 3m monthly unique users on 4 servers running the Microsoft stack.
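The Facebook per-user figure is simple division, sketched here using only the numbers quoted in the list: 200m users, half assumed inactive, and server spend somewhere between $60m and $200m a year.

```python
# Server cost per active user, using the figures quoted in the list above.
# The 50% active share is the article's own "assume half are inactive".

def cost_per_active_user(annual_server_spend, total_users, active_share=0.5):
    """Annual server spend divided across the users assumed to be active."""
    active_users = total_users * active_share
    if active_users <= 0:
        raise ValueError("there must be at least some active users")
    return annual_server_spend / active_users


if __name__ == "__main__":
    users = 200_000_000
    low = cost_per_active_user(60_000_000, users)
    high = cost_per_active_user(200_000_000, users)
    print(f"Between ${low:.2f} and ${high:.2f} per active user per year")
```

So the $2 figure is the top of the range; at the low end of the spend estimate it is closer to 60 cents.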

People do not obey Moore’s Law

The looming bottleneck in business IT really is people. It’s difficult to reduce your need for them; they’re messy, unreliable and insanely expensive. It might be tempting to imagine a breed of motivated SuperUsers – each a little powerhouse of tech. (Believe me, I’m happy to stop fixing printers!) But it won’t happen. Even if SuperUsers can be somehow bred in-house, there are end users outside the company firewall.

The key challenge for organisational IT in a cheap-infrastructure world will be finding new ways to shift admin work and responsibilities to users. Sharing my inbox with a colleague because I’m on holiday shouldn’t require a support ticket, neither should installing a tiny piece of pre-approved software, or publishing a simple database report. These are the big unmanaged costs that need squeezing out. Servers, meh, they’re cheap.